Test-Driving Sun's Prototype Display Grid

Time-sharing was first described in 1957, and we often associate it with logging into remote servers via a character-based terminal to execute jobs on those servers[1] [2] [3] [4]. Although the first time-sharing systems matched that description, time-sharing is a much broader concept, and is an extension of the multitasking capability present in all modern operating systems. While multitasking shares a single processor between multiple independently running OS processes, time-sharing services, in addition, allow those processes to run on behalf of different users.

Recent time-sharing systems are not based on character terminals. For instance, the Java Virtual Machine provides time-sharing services via the Java Authentication and Authorization Service (JAAS) framework. JAAS allows Java threads to execute in the VM on behalf of authenticated users, facilitating a multi-user, multitasking VM environment[5] [6].

Web servers also provide the conceptual equivalent of time-sharing: When you purchase a book from Amazon.com, for instance, your purchase request is represented with a thread of control on a remote Amazon server - a thread executing on your behalf, and sharing the CPU's resources with many other concurrent users for the duration of processing that request. Although such requests represent very short periods of CPU time, they operate on the same fundamental principle of sharing a centrally managed resource among users.

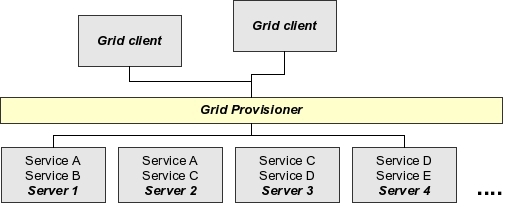

While a single time-sharing system allows many users to share one server's CPU time and other computing resources, grid computing brings that sharing to the level of a network of servers. In a grid, parts of a computing task are parceled out among several independent servers. For instance, user authentication might be performed by one server, while a CPU-intensive calculation may be scheduled on another server's CPU, all the while data storage may be provided by yet another server's file system.

What sets a grid apart from other distributed systems is that in a grid such resources are by design shared among many users, often belonging to different organizations and administrative domains[7]. A grid computing infrastructure, in turn, mediates access to all those resources, providing basic time-sharing services, such as scheduling, resource allocation, or concurrency control, for a set of shared servers.

The Emancipation of Services

Each grid service may be defined via interfaces or protocols following widely accepted standards. For instance, user authentication may be specified via a Kerberos-based authentication facility, resource metadata may be offered via LDAP[8], and file systems may be provided via NFS[9] or Samba[10]. As long as grid services adhere to agreed-upon standards, implementations of each service become interchangeable.

That, in turn, emancipates a service from a server: Since clients rely on logical service interfaces, not on access to specific servers, a client need not know what servers provide the required service. Instead, a client can depend on the grid provisioning software to find the server with the least load, or highest availability, or lowest service latency, based on the grid's policy.
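The policy-driven selection described above can be illustrated with a minimal sketch. The `Server` record, the policy names, and `pick_server` are hypothetical names invented for this example; they do not correspond to any particular grid middleware.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    load: float          # fraction of capacity in use, 0.0 to 1.0
    latency_ms: float    # measured service latency
    available: bool = True


# Each (hypothetical) policy maps a server to a score; the lowest score wins.
POLICIES = {
    "least_load": lambda s: s.load,
    "lowest_latency": lambda s: s.latency_ms,
}


def pick_server(servers, policy="least_load"):
    """Return the available server that best satisfies the given policy."""
    candidates = [s for s in servers if s.available]
    if not candidates:
        raise LookupError("no server currently offers this service")
    return min(candidates, key=POLICIES[policy])


ldap_pool = [
    Server("ldap-1", load=0.80, latency_ms=4.0),
    Server("ldap-2", load=0.35, latency_ms=9.0),
    Server("ldap-3", load=0.10, latency_ms=2.0, available=False),
]
print(pick_server(ldap_pool).name)                    # least loaded available server
print(pick_server(ldap_pool, "lowest_latency").name)  # fastest available server
```

The client in this sketch asks only for "an LDAP server"; which machine answers is decided by the policy, which is the sense in which the service is independent of any one server.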

The emancipation of services provides advantages in scaling up a grid: To increase storage space, a grid administrator can swap a Samba server with another server providing more available disk space, for instance. Or, to offer more robust user authentication, an administrator may provision several authentication servers on the grid, offering the grid provisioning software a larger pool of available resources to choose from. In addition to improving system throughput, provisioning multiple redundant servers for a service also improves that service's availability[11].

The flip-side of the emancipation of services from servers is that each server can specialize in offering one or a handful of well-defined services. That dovetails with the trend of commodity server hardware, since many grid services require relatively modest computing resources. Servicing LDAP queries, for instance, can easily be performed on inexpensive server hardware, especially if many commodity servers can share in the load of offering that service.

Specialized servers also reduce administrative costs. Since a single grid server can focus on providing just one or, at most, a few services, that server's administrator has to deal with less software configuration, monitoring, and upgrades. Replacing a failed server also becomes easier, since configuring and provisioning the replacement can be streamlined, if not completely automated.

Desktop Complexity

Server specialization is the exact opposite of today's desktop computing environments. Instead of specializing in a handful of services, desktop operating systems today compete in offering an increasing number of increasingly complex features. Such features are created in response to a sophisticated and demanding user base: Most desktop users are no longer satisfied with being able to compose simple documents in a text editor, but want their computers to be able to access services on the Web, manage digital photos and music, and, more recently, to serve as home entertainment hubs. Mobile computers impose additional demands on a desktop environment, such as the ability to offer access to wireless hotspots, and to provide security and recovery features.

Those capabilities are provided by cooperating tasks, such as user authentication, file system access, window management, and so forth. Each task contributing to a desktop session, in turn, is often represented by an independent operating system process, such as the window manager process, the file system mount daemon, the user authenticator, and even the processes that forward mouse and keyboard input to the operating system.

In a traditional desktop environment, those processes must be installed, configured, and managed on a local computer. As a result, instead of specialization, today's PCs are the result of integration: of bundling a myriad of services and associated software on each desktop. Such integration efforts have given users richer features, but only at the cost of leaving users with an increasingly complex desktop environment to manage. A recent ZDNet UK article quotes research showing that

a PC can cost up to 25 times its purchasing price over a five-year period, particularly when calls to help desks escalate due to bad desktop management. An average call querying the desktop lasts 17 minutes, of which nine are spent simply identifying hardware and software[12].

The Desktop as a Grid Service

Although the desktop paradigm has come to represent access to a single computer, the processes providing a desktop session's capabilities can be distributed to servers on a grid. For instance, one server may perform user authentication, another may offer the user access to a filesystem, and yet another can provide the window manager. In that manner, a desktop user session lends itself to grid-based distribution.

Such distribution pushes the complexity of running and managing the services that make up a desktop session to the network, relieving users of desktop management chores. Hence, a grid-based desktop transforms the problem of software installation and maintenance into that of provisioning networked services.

A key problem of provisioning the services of a grid-enabled desktop is deciding how much complexity to leave on a user's computer and, concomitantly, what responsibilities to move to specialized servers. The assumption is that users, in general, are bad at managing desktop complexity, whereas dedicated servers provisioned via grid middleware can excel at that task.

Distributed desktop platforms in use today can be categorized according to their distribution of computational responsibilities between client and server. The most popular distributed desktop environments today include X Windows[13], Citrix's MetaFrame product[14] and GoToMyPC[15], Microsoft's RDP-based remote desktop[16], Virtual Network Computing (VNC)[17], the research prototype THINC[18], and Sun's Sun Ray product line[19].

Some, like X Windows, require substantial client-side resources and maintain a great deal of computational state at the client. Others, such as VNC, are implemented in software, and run as applications on top of a full-fledged OS. Sun's Sun Ray, the focus of this article, represents another extreme, with no client-side state and very minimal client-side computing.

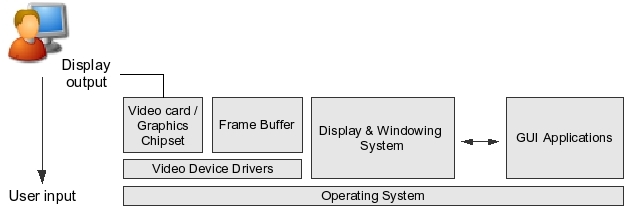

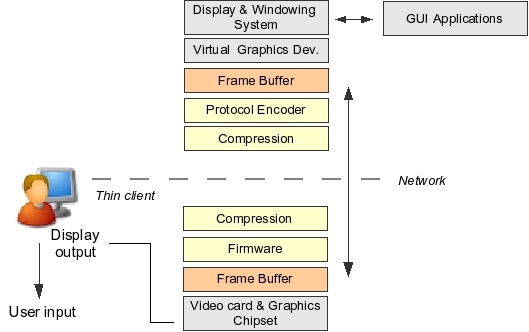

To appreciate the available distribution choices, it is helpful to illustrate the key components of a non-distributed desktop residing on a user's PC:

To keep the above illustration simple, the diagram limits user input and output to a display device, such as a CRT or LCD monitor, and does not consider keyboard and mouse input, or other peripherals. The user's display typically connects to the PC hardware via a VGA or DVI connector. The display hardware inside a PC comprises a set of graphics chips, including a memory area reserved for buffering the complete bitmapped image raster that's sent to the monitor. Such a frame buffer, or video memory, may be part of a dedicated video adapter, but in some cases the buffer shares the PC's main memory. The raster image stores the display information with a specified resolution and color depth. Images defined in the RGB color space, for instance, typically require at least 3 bytes of memory per pixel, one byte each for red, green, and blue. Thus, a 24-bit color frame at a resolution of 1280x1024 pixels requires 3,932,160 bytes (3,840 KB, or 3.75 MB) of buffer memory.
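The frame buffer arithmetic is easy to check. The following is a small sketch; the function name `frame_buffer_bytes` is made up for this example, and it assumes pixels are packed with no padding between rows.

```python
def frame_buffer_bytes(width, height, bits_per_pixel=24):
    """Bytes needed to hold one full frame, assuming tightly packed pixels."""
    bits = width * height * bits_per_pixel
    return (bits + 7) // 8  # round up to whole bytes


size = frame_buffer_bytes(1280, 1024)
print(size)           # 3932160 bytes
print(size / 2**10)   # 3840.0 KB, counting 1 KB as 1,024 bytes
print(size / 2**20)   # 3.75 MB, counting 1 MB as 1,048,576 bytes
```

Note that the result depends on the unit convention: 3,932,160 bytes is 3,840 KB and 3.75 MB in binary units, or roughly 3.93 MB if a megabyte is taken as one million bytes.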

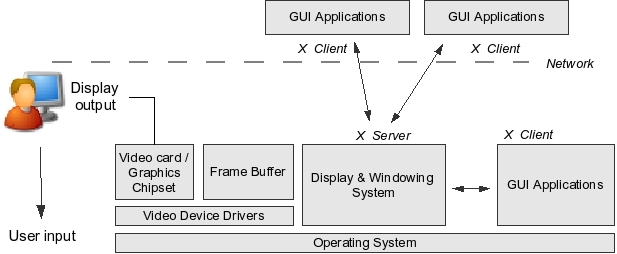

One way to divide the key building blocks of a desktop session across the network is along client-server lines. That is the route the X Window system chose. In dividing the responsibilities of client and server, X Windows starts with the perspective of the local display. In X terminology, the local display is the server, providing services to X clients connecting to that server across the network. X's clients are the remote applications a user wishes to access. The X server accepts graphical output requests from a remote client, and processes those requests by displaying the remote program's window, for instance. In addition, the server on the local host forwards keyboard and mouse events to the client.

The X Windows specifications define a communication protocol between client and server. The consequence of this client-server architecture is that the server (the user's local host) and the client must both run appropriate portions of the X Windows code so that they can communicate via X protocol messages.

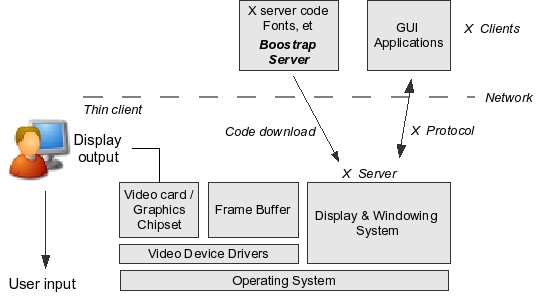

An X client or, more commonly, an X server can be implemented in either software or hardware. The advantage of a hardware-based implementation is that no software needs to be installed on a user's workstation. Instead, users can take advantage of a thin client that requires no or little maintenance. An X Windows-based thin client runs the X server code, and the user can invoke applications executing on remote servers to connect back to the thin client's display.

The main disadvantage of an X Windows thin client has to do with the size of an X server infrastructure. A thin client running an X server needs not only code to interpret X protocol commands, but also code and additional artifacts to be able to act on those commands and create a functional display. That includes the widget toolkit used to draw windows, the fonts to display text, as well as a window manager - code that can add up to significant size.

While a thin client can download all that code from a centralized server at boot time, processing and managing that code still requires significant CPU power and memory. That increases the cost of a thin-client device, and also puts the burden of managing not only X clients, but also the downloadable boot X server on an administrator's shoulders. In addition, a thin client provider would have to perform network bandwidth, latency, and error recovery optimizations in the context of the X protocol, effectively limiting client-server performance.

Cache Your Pixels

A different approach to distributing a desktop's services on the network starts not with a client-server architecture, but with the well-known technique of data caching used to speed up access to networked information. In many enterprise applications, for instance, clients often maintain a local cache of frequently accessed data in order to avoid the overhead of performing queries each time similar data is requested.

In a graphical desktop, the raster image data rendered by the operating system and graphics chipset is buffered to the video memory, or frame buffer. Instead of sending that data to the display, the buffered raster data can be distributed on the network in a replicated cache, with a master copy on a server, and a secondary copy on a thin client. Updates made to the screen are first copied into the server's cache, and then are immediately replicated to the thin client's cache. That architecture almost completely pushes desktop computation to the network, and leaves to a user's system only the task of keeping the local buffer cache in sync with the server's buffer.

The problem of keeping the distributed pixel caches in sync between the desktop client and a server is orthogonal to the type of windowing system used, and even to the operating system. Such a mechanism can be implemented on any operating system or windowing system by means of a virtual graphics device that, instead of pushing a bitmap raster to the display, pushes those rendered images into the server-side of the distributed cache, which then takes care of synchronizing local and remote cache content.

Successful cache replication depends on a high hit-to-miss ratio: The less the screen changes, the less data needs to be transferred from server to thin client. That fits the pattern of most desktop usage well: in a typical desktop session, most desktop UI changes occur in response to user actions. And user actions are relatively sparse.

Typing text in a word processor, for instance, causes very few changes on the screen, mostly just in the screen area of the newly typed text. The rest of the screen pixels remain the same, requiring relatively small amounts of data to update the thin client's screen buffer. The worst-case scenario is a full-screen movie. A movie sent as uncompressed frames, at 25 frames per second, in 24-bit color and with a display resolution of 1280x1024 pixels, would require a sustained bandwidth of 786,432,000 bits per second, or approximately 750 Mb/s when a megabit is counted as 1,048,576 bits. Compression would reduce that bandwidth requirement. In between these extremes are user actions such as dragging a window or scrolling inside windows.

Even with many screen changes, the server's cache update mechanism can intelligently compute the difference between two consecutive screen updates. For instance, some white pixels may stay fixed between two screen updates even when scrolling text in a window. The server can compute such differences via a bit-by-bit comparison of two rasters, and send only the minimal updates necessary to the client.
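As a rough illustration of the comparison step, the sketch below finds the smallest rectangle that encloses every changed pixel between two frames. It assumes both frames are lists of rows of integer pixel values with identical dimensions; `dirty_rectangle` is a name chosen for this example, and a real server would likely track several smaller rectangles rather than one bounding box.

```python
def dirty_rectangle(old, new):
    """Return (x, y, width, height) bounding all changed pixels, or None."""
    changed_rows = [y for y, (a, b) in enumerate(zip(old, new)) if a != b]
    if not changed_rows:
        return None  # the two rasters are identical, nothing to send
    top, bottom = changed_rows[0], changed_rows[-1]
    # Collect the columns that differ in any changed row.
    changed_cols = [
        x
        for y in changed_rows
        for x, (p, q) in enumerate(zip(old[y], new[y]))
        if p != q
    ]
    left, right = min(changed_cols), max(changed_cols)
    return (left, top, right - left + 1, bottom - top + 1)


before = [[0] * 6 for _ in range(4)]
after = [row[:] for row in before]
after[1][2] = 7   # two pixels change, as if a character had been typed
after[2][4] = 7
print(dirty_rectangle(before, after))   # (2, 1, 3, 2)
```

Only the pixels inside that rectangle would need to travel to the thin client; everything outside it is already correct in the client's copy.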

Screen updates may be communicated between client and server via a protocol that aims to minimize the amount of data transmitted. Instead of sending arrays of pixels representing screen changes, drawing primitives can effect bulk updates in the client. For instance, if two screen areas contain the same set of pixels, a COPY command can instruct the client to copy one rectangular screen area to another screen location. Or, if a screen area consists of identical pixels, a FILL command could cause the client to fill that area with the specified pixel.
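A server-side encoder might choose between such primitives along the following lines. This is a sketch only: the tuple layout and the fallback command name `SET` (meaning "send literal pixels") are assumptions made for the example and do not describe any actual wire format.

```python
def encode_region(frame, x, y, w, h):
    """Pick the cheapest command that reproduces a rectangle of `frame`."""
    rows = [frame[row][x:x + w] for row in range(y, y + h)]
    first = rows[0][0]
    if all(pixel == first for row in rows for pixel in row):
        # One pixel value describes the whole area.
        return ("FILL", x, y, w, h, first)
    # Fall back to sending the literal pixel values.
    return ("SET", x, y, w, h, rows)


screen = [
    [255, 255, 255, 255],
    [255, 255, 255, 255],
    [255,   0, 255, 255],
]
print(encode_region(screen, 0, 0, 4, 2))   # ('FILL', 0, 0, 4, 2, 255)
print(encode_region(screen, 0, 2, 2, 1))   # ('SET', 0, 2, 2, 1, [[255, 0]])
```

The saving is easy to see: the FILL command carries a single pixel value no matter how large the rectangle is, while the literal form grows with the rectangle's area.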

Such protocol primitives allow protocol optimizations specific to thin client and server communication, and are independent of the original display protocol, such as X. They also facilitate graceful degradation of display quality in the face of communication obstacles, such as high packet loss ratio. The server, noticing the dropped packets, could re-transmit only the updates most essential to maintaining usability. As well, communication between client and server can be compressed to further reduce bandwidth requirements. That leads to a design depicted in Figure 5:

The communication protocol between a thin client and the server determines not just the amount of required bandwidth, but also the division of computational responsibilities between thin client and server. For instance, one objective of the protocol's design might be to minimize the computation cost of executing the protocol on the client, allowing for clients with low-cost CPUs, low power consumption, and relatively small amounts of memory.

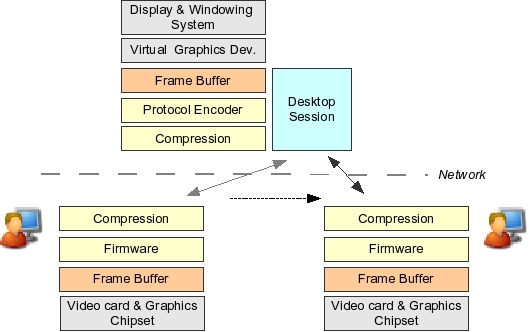

At the same time, placing the bulk of the computational burden on the server allows a service provider to take advantage of the scalability characteristics inherent in the replicated buffer-based thin client design. In addition to distributing on a grid the various services that provide a desktop session's functionality, a service provider can push the thin-client and server protocol processing to a grid as well.

That could take advantage of the parallelism inherent in processing screen updates: Parts of the screen can be handled by independent "compute servers," each server computing a portion of screen updates. Results of those computations can be provided as input to a protocol processor that computes the drawing primitives representing screen changes. Finally, compression can be performed in parallel as well. While communication cost between grid nodes may well dominate such an operation, this example illustrates the scaling potential of the architecture. In essence, this architecture turns the problem of desktop management into a problem of high-performance, high-throughput computing.
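The partitioning idea can be sketched on a single machine by letting worker threads stand in for the independent compute servers. Everything here is illustrative: the band-based split, the function names, and the use of a thread pool are assumptions of the example, and a real grid would add the communication costs mentioned above.

```python
from concurrent.futures import ThreadPoolExecutor


def changed_rows_in_band(old, new, start, stop):
    """Work done by one 'compute server': compare one horizontal band."""
    return [y for y in range(start, stop) if old[y] != new[y]]


def changed_rows_parallel(old, new, bands=4):
    """Split the screen into horizontal bands and compare them concurrently."""
    height = len(old)
    step = max(1, -(-height // bands))  # ceiling division
    ranges = [(s, min(s + step, height)) for s in range(0, height, step)]
    with ThreadPoolExecutor(max_workers=max(1, len(ranges))) as pool:
        parts = list(
            pool.map(lambda r: changed_rows_in_band(old, new, *r), ranges)
        )
    # Merge the per-band results in screen order for the protocol processor.
    return [y for part in parts for y in part]


old_frame = [[0] * 8 for _ in range(8)]
new_frame = [row[:] for row in old_frame]
new_frame[1][3] = 9
new_frame[6][0] = 9
print(changed_rows_parallel(old_frame, new_frame))   # [1, 6]
```

Because each band is compared independently, the merged result is the same as a sequential comparison; only the elapsed time changes.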

This model of thin client computing also provides session mobility: Since all desktop state resides on the server, a user can disconnect from a thin client at any time without having to close the desktop session. Later, the user can log in from another thin client device, and resume the desktop session in the exact state in which she left it. As in the case of X Windows, a replicated cache-based thin client desktop can be implemented in hardware or software.

Thin Client Grid Computing: Test-Driving Sun's Display Grid

The rest of this article focuses on an implementation of the replicated frame buffer cache paradigm, Sun's Sun Ray[20]. The Sun Ray's architecture is novel in that it pushes almost all computation to the network. Indeed, the Sun Ray client, a small piece of hardware that connects to a keyboard, a mouse, a display, and a network cable, does so little computing that it can operate on about 9W of electricity.

At the heart of the Sun Ray architecture is a communication protocol that relays status between server and client, including information about user authentication and a user's desktop session, sends keyboard and mouse state to the server, forwards audio and peripheral I/O between server and client, and transports screen updates from the server to the thin client. The Sun Ray's local screen buffer is used for display updates, but that cache is treated as ephemeral and can be overwritten by the server at any time. Thus, the client is stateless. The firmware in the Sun Ray device contains networking code as well as code specific to the Sun Ray protocol.

| Command | Description |
|---|---|
| SET | Set literal pixel values of a rectangular region |
| BITMAP | Expand a bitmap to fill a rectangular region with a (foreground) color where the bitmap contains 1's, and another (background) color where the bitmap contains 0's |
| FILL | Fill a rectangular region with one pixel value |
| COPY | Copy a rectangular region of the frame buffer to another location |
| CSCS | Color-space convert a rectangular region from YUV to RGB with optional bilinear scaling |
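To make the division of labor concrete, here is a minimal sketch of what a client does with four of these commands. It is not Sun's firmware or wire format: the class, method signatures, and the list-of-rows frame buffer are assumptions for illustration, all regions are assumed to lie within the screen, and CSCS is omitted because it involves color-space arithmetic beyond the scope of a short example.

```python
class FrameBuffer:
    """A toy client-side screen cache that applies drawing commands."""

    def __init__(self, width, height, fill=0):
        self.width, self.height = width, height
        self.pixels = [[fill] * width for _ in range(height)]

    def set(self, x, y, rows):
        """SET: store literal pixel values for a rectangle."""
        for dy, row in enumerate(rows):
            self.pixels[y + dy][x:x + len(row)] = row

    def fill(self, x, y, w, h, value):
        """FILL: paint a rectangle with one pixel value."""
        for row in self.pixels[y:y + h]:
            row[x:x + w] = [value] * w

    def copy(self, sx, sy, w, h, dx, dy):
        """COPY: duplicate a rectangle elsewhere in the buffer."""
        # Snapshot the source first so overlapping regions copy correctly.
        block = [self.pixels[sy + r][sx:sx + w] for r in range(h)]
        self.set(dx, dy, block)

    def bitmap(self, x, y, bits, fg, bg):
        """BITMAP: expand 1s to the foreground color and 0s to the background."""
        self.set(x, y, [[fg if bit else bg for bit in row] for row in bits])


fb = FrameBuffer(4, 3)
fb.fill(0, 0, 2, 2, 9)
fb.copy(0, 0, 2, 2, 2, 1)
fb.bitmap(0, 2, [[1, 0]], fg=5, bg=1)
for row in fb.pixels:
    print(row)
```

Each method only moves or writes pixels; none of them needs to know what application produced the update, which is why such a client can stay small.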

The latest Sun Ray version at the time of writing provides built-in support for DHCP and virtual private networking, and the Sun Ray protocol was updated to work over low-bandwidth networks. The result is that a user can access a Sun Ray server over a wide-area network, such as a broadband residential connection.

Sun has made remotely hosted desktop sessions available to some of its employees as part of the company's work-at-home program for some time. More recently, Sun started to position the Sun Ray as a way to offer consumers a subscription-based desktop service as well. Since the Sun Ray architecture translates desktop management to a highly parallel, grid computing task, Sun's expertise in high-throughput computing puts the company in an advantageous position to offer such a service.

To determine how well such a remotely hosted Sun Ray desktop can supplant a user's traditional desktop computer or laptop, we performed a four-month evaluation of Sun's prototype hosted desktop environment, a proof-of-concept of a broadband desktop service. The Sun Ray desktop used in this evaluation was hosted on Sun's CXONet facility on the US East Coast, and we accessed that desktop via a residential DSL connection from California. The workload used in this evaluation consisted of four main categories of applications: an email client (Mozilla Thunderbird), a Web browser (Firefox)[21], a word processor and spreadsheet (OpenOffice)[22], and an IDE to work on source code (IntelliJ IDEA)[23].

Using the remotely hosted desktop daily for those tasks, instead of a usual desktop or laptop environment, caused no loss of productivity. Because those applications were running on fast servers, the system proved remarkably responsive. Based on casual observation, application startup, for instance, tended to be quicker than on a laptop with a 3.2 GHz Pentium 4 CPU.

At the same time, occasional hiccups in screen redraw operations remind the user of the network's presence between the display and the remote desktop server. As expected, redraw latency was most pronounced when moving windows around the desktop or scrolling documents in a window: It does take a perceptible amount of time for the Sun Ray to catch up and completely redraw the screen. While a user can get used to that redraw latency, broader consumer adoption of the Sun Ray desktop might require further investigation into how such delays can be reduced, if not completely eliminated.

Bandwidth Versus Latency

In addition to subjective observations, we wanted to measure the Sun Ray's actual bandwidth consumption while using the above applications. To put that consumption in context, we first measured the peak point-to-point bandwidth available between the remote Sun Ray server and the Sun Ray device.

While a DSL connection presents asymmetrical network bandwidth, with more bandwidth available for download than for upload, that matches the bandwidth requirements of a Sun Ray session, since most data is transmitted from the server to the thin client device at the network's edge. Thus, we focused on measuring download bandwidth from the remote server.

To eliminate TCP/IP connection startup latency from the measurements, we wrote a simple client-server bulk data transfer application. The server portion of that application ran inside a residential network on a fast laptop running the Fedora Core 4 version of Linux. The client portion, running on the remote Sun Ray server, opened a connection to the server and transferred a specified number of bytes as character data from the Sun Ray server. To collect representative samples, we transferred 1 MB and 10 MB amounts of bulk data, and repeated each transfer three times at various times of the day, with the following results:

| Run | Bytes Sent | Client's Time | Server's Time | Bandwidth on Client | Bandwidth on Server |
|---|---|---|---|---|---|
| 1 | 1 MB | 9,085 ms | 9,201 ms | 901.7 Kbps | 890.34 Kbps |
| 2 | 1 MB | 9,117 ms | 9,191 ms | 898.54 Kbps | 891.3 Kbps |
| 3 | 1 MB | 8,648 ms | 8,865 ms | 947.27 Kbps | 925.23 Kbps |
| 4 | 10 MB | 85,382 ms | 85,403 ms | 959.45 Kbps | 959.22 Kbps |
| 5 | 10 MB | 84,891 ms | 84,984 ms | 965 Kbps | 963.95 Kbps |
| 6 | 10 MB | 89,040 ms | 89,049 ms | 920.03 Kbps | 919.94 Kbps |
| Average bandwidth measured: | | | | 932.00 Kbps | 925.00 Kbps |

Measurements done on 8/25/05. Solaris 10 CXO Server. Linux 2.6.12, Fedora 4. 3.2 GHz Intel Pentium 4, 512 MB of memory. Network: SBC Yahoo DSL, 10 Mb/s 802.11b wireless network, Linksys router. No significant difference detected over a 100 Mb/s switched Ethernet.

As the table illustrates, at best the connection offered 965 Kbps of sustained bandwidth; the worst-case bandwidth was 890 Kbps. Given these samples, the average amount of sustained bandwidth between the DSL connection and the remote Sun Ray server was 928.5 Kbps.
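The measurement application itself is not reproduced in this article, so the following is only a sketch of the same idea: time a bulk transfer over a connection that is already open, on both the sending and the receiving side. The function names are invented for the example, and it runs both ends on the loopback interface so that it is self-contained. The `kbps` helper counts 1 MB as 1,048,576 bytes and 1 Kb as 1,024 bits, which is the convention that reproduces the bandwidth figures in the table from the byte counts and times.

```python
import socket
import threading
import time


def kbps(num_bytes, elapsed_ms):
    """Bandwidth in Kbps, counting 1 Kb as 1,024 bits."""
    return num_bytes * 8 / (elapsed_ms / 1000) / 1024


def receive_all(listener, result):
    """Receiving side: accept one connection and time the incoming data."""
    conn, _ = listener.accept()
    with conn:
        start = time.perf_counter()
        total = 0
        while chunk := conn.recv(65536):
            total += len(chunk)
        result["bytes"] = total
        result["ms"] = (time.perf_counter() - start) * 1000


def measure(num_bytes, host="127.0.0.1"):
    """Send num_bytes over loopback; return (sender_ms, receiver_ms, bytes)."""
    listener = socket.create_server((host, 0))   # port 0: let the OS choose
    port = listener.getsockname()[1]
    result = {}
    receiver = threading.Thread(target=receive_all, args=(listener, result))
    receiver.start()
    with socket.create_connection((host, port)) as sender:
        start = time.perf_counter()   # the connection is already open here
        sender.sendall(b"x" * num_bytes)
    receiver.join()                   # wait until the last byte has arrived
    sender_ms = (time.perf_counter() - start) * 1000
    listener.close()
    return sender_ms, result["ms"], result["bytes"]


print(round(kbps(1 * 2**20, 9085), 2))     # run 1, client's time
print(round(kbps(10 * 2**20, 85382), 2))   # run 4, client's time
```

Calling `measure(2**20)` on a real link, with the two halves on different hosts, would yield the two elapsed times that correspond to the "Client's Time" and "Server's Time" columns; on loopback the numbers say little about a DSL line and serve only to exercise the code.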

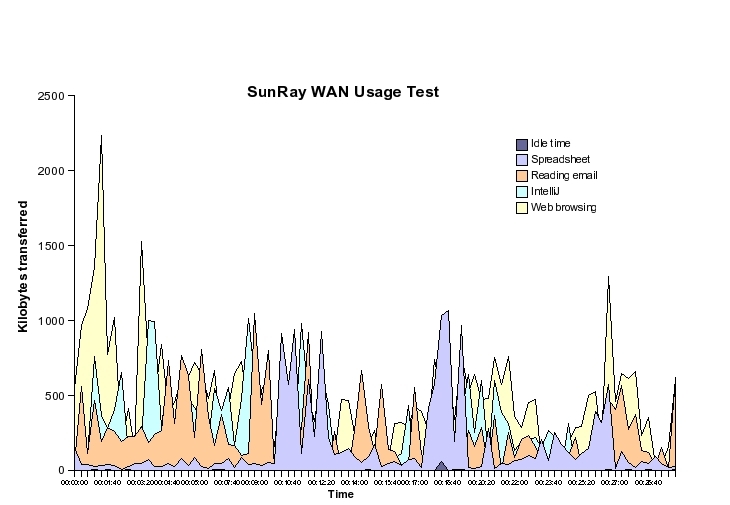

Next, we proceeded to measure the thin client's bandwidth consumption during typical use of each of the four categories of applications listed above. A Sun engineer kindly set up a utility on the Sun Ray server that took snapshots of the amount of data transferred between the Sun Ray server and the remote thin client device. With this utility collecting measurements, we proceeded to work as usual with each of the four types of applications: Using the StarOffice spreadsheet to edit a dataset consisting of several hundred rows; reading and replying to email with Mozilla Thunderbird; developing Java code with IntelliJ IDEA; and browsing the Web with Mozilla Firefox.

We used each application exclusively for 30 minutes and took snapshots of the amount of bandwidth consumed in each 20-second interval. The following diagram shows the amount of data transferred in each measurement period, in each application use session as well as during the idle 30 minutes:

As the diagram indicates, the amount of data transferred from server to client tends to be bursty. Since such data transfers represent mostly screen updates, replacing the local cache's content with new pixel data, most bursts occur when large sections of the screen require updates.

Predictably, Web browsing required the most updates, since the screen had to update not only each time the browser loaded a new Web page, but also while scrolling a page. IntelliJ's bandwidth consumption reflected the loading of new code text, scrolling, and switching between editor windows, as well as running unit tests. During code edits, however, very little screen update activity took place. The email program's bandwidth usage mirrors the pattern of loading a new email message and composing replies in a new window. The spreadsheet exhibits a more uneven usage: While editing spreadsheet data within a single screen view, no scrolling was involved, and hence the editing activity consumed little bandwidth; the peaks represent scrolling activity. Table 3 summarizes these measurements:

| | Idle Time | StarOffice Spreadsheet | Reading Email with Thunderbird | Developing with IntelliJ IDEA | Web Browsing with Firefox |
|---|---|---|---|---|---|
| Total bytes transferred | 321.51 KB | 15,057.7 KB | 22,553 KB | 23,560.08 KB | 43,464.89 KB |
| Avg bandwidth consumed | 1.43 Kbps | 66.92 Kbps | 100.24 Kbps | 104.71 Kbps | 193.18 Kbps |
| Avg of available bandwidth consumed | 0.11% | 7.21% | 10.8% | 11.28% | 20.81% |
| Peak bandwidth consumed | 23.61 Kbps | 428.15 Kbps | 420.06 Kbps | 405.09 Kbps | 894.44 Kbps |
| Max. of available bandwidth consumed | 2.54% | 46.11% | 45.24% | 43.63% | 96.11% |

The summary table computes the average bandwidth consumed by each activity during each 30-minute session, the peak bandwidth occurring in any 20-second interval, and the relationship of the bandwidth consumed by each activity to the total available bandwidth on the network connection.
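The summary statistics can be derived from per-interval snapshots along these lines. The sketch assumes each snapshot is the number of kilobytes transferred during one 20-second interval and that the available bandwidth is the 928.5 Kbps average measured earlier; the function name and the short sample series are made up for the example and are not the measured data.

```python
def summarize(kb_per_interval, interval_s=20, available_kbps=928.5):
    """Average and peak bandwidth from per-interval transfer volumes (in KB)."""
    rates = [kb * 8 / interval_s for kb in kb_per_interval]  # Kbps per interval
    average = sum(rates) / len(rates)
    peak = max(rates)
    return {
        "total_kb": sum(kb_per_interval),
        "avg_kbps": average,
        "peak_kbps": peak,
        "avg_share": average / available_kbps,    # fraction of available bandwidth
        "peak_share": peak / available_kbps,
    }


# Hypothetical snapshots: a mostly quiet session with one burst.
stats = summarize([0, 100, 50, 250])
print(stats["total_kb"], stats["avg_kbps"], stats["peak_kbps"])   # 400 40.0 100.0

# Spreading the Firefox session's 43,464.89 KB evenly over ninety
# 20-second intervals (30 minutes) gives the session's average rate.
firefox = summarize([43464.89 / 90] * 90)
print(round(firefox["avg_kbps"], 2), round(firefox["avg_share"] * 100, 2))
```

The gap between the average and the peak in such a series is a simple way to quantify how bursty a session is.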

While Web browsing required the most screen updates, and hence the most bandwidth, even that activity took advantage of only 20.81% of the total bandwidth available between the server and thin client, with an average bandwidth requirement of 193.18 Kbps. Web browsing also proved the burstiest activity, with the highest peak requiring 96.11% of the available bandwidth. The other usage patterns required much less bandwidth, and proved much less bursty. None of the activities took up the maximum bandwidth available between the Sun Ray server and the thin client at the DSL end of the connection.

These four application categories represent only a subset of desktop usage patterns, and exclude, for instance, multimedia, such as streaming video. RealVideo and the Java Media Player executing on the remote desktop did not compare well to using those applications on a local desktop, suggesting higher bandwidth requirements.

Apart from multimedia applications, however, bandwidth no longer appears to be a limiting factor in broader consumer deployment of the Sun Ray remote desktop service. This experiment suggests that the occasional screen redraw delays are not the result of insufficient network bandwidth between the Sun Ray server and the thin client. Ruling out bandwidth leaves latency as the possible cause of redraw delays. That latency may be caused by the Internet connection between the Sun Ray server and the thin client, and might also be caused by constraints on the ability of the server or the thin client to process the Sun Ray protocol commands.

While more measurements are needed to determine the key source of latency, should limitations in processing capability on either the client or server be among the reasons, protocol commands can be optimized to trade more bandwidth for less computational intensity. For instance, compressing fewer protocol commands would consume more bandwidth between client and server, but would relieve both of some processing work. In addition, much of the server's work can be distributed on a grid to scale up the server's processing ability.

Reducing the latency inherent in the Internet connection between server and thin client requires a network topology designed to minimize the hops between the connection endpoints. Such a network might be relatively straightforward to build, and several commercial network providers already operate colocation facilities in close network proximity to most end-users, such as in large metropolitan areas. Placing Sun Ray servers at these locations, and directing a user's session to a server in near proximity to the user, could help reduce network latency. Such dynamic redirection, however, would also require possible additions to the Sun Ray protocols to determine which server to direct the user's session to after login.

Future Possibilities

The Sun Ray system currently requires a dedicated thin client device. Since the architecture can be implemented in software as well, should Sun make a Sun Ray client available as a software package, or even open-source that software, possibly any network-connected client could access a remote Sun Ray server.

The Sun Ray architecture's relatively low computation and memory requirements on the client make that an especially attractive option for mobile devices[24] [25] [26]. For instance, a cell phone connected to the network via a cellular network or WiFi could run the Sun Ray software, and render the display to a Bluetooth-enabled display. The cell phone could also integrate a Bluetooth-enabled keyboard and mouse to provide a complete portable desktop experience. Recent developments with foldable displays and miniaturized, expandable keyboards, together with a ubiquitous Sun Ray client, could foreshadow a new era in mobile computing[27] [28].

Acknowledgements

The author would like to thank Brian Foley, Bob Gianni, and Ismet Nesmicolaci, all with Sun Microsystems, Inc., for assistance in evaluating the Sun prototype display grid.Resources

[1] Bob Bemer, best known as the "father of ASCII," described the time-sharing concept in 1957. The first system implementing the concept was MIT's Multiple Access Computer, Machine Aided Cognition, or Project MAC.

http://en.wikipedia.org/wiki/Project_MAC

http://www-formal.stanford.edu/jmc/history/timesharing/timesharing.html

[3] D. M. Ritchie and K. Thompson. The UNIX Time-Sharing System. Communications of the ACM, 17, No. 7 (July 1974), pp. 365-375.

http://cm.bell-labs.com/cm/cs/who/dmr/cacm.html

[4] D. M. Ritchie. The Evolution of the Unix Time-sharing System. AT&T Bell Laboratories Technical Journal 63 No. 6 Part 2, October 1984, pp. 1577-93.

http://cm.bell-labs.com/cm/cs/who/dmr/hist.html

[5] The Java Authentication and Authorization Service (JAAS).

http://java.sun.com/products/jaas

[6] D. Balfanz and L. Gong. Experience with Secure Multi-Processing in Java. Proceedings of the IEEE International Conference on Distributed Computing Systems, 1998.

http://www.cs.ucsb.edu/~lingli_z/reference/balfanz98experience.pdf

[7] I. Foster, C. Kesselman and S. Tuecke. The Anatomy of the Grid: Enabling Virtual Organizations. International Journal of Supercomputing Applications, 2001.

http://www.globus.org/alliance/publications/papers/anatomy.pdf

[8] M. Wahl, et al. Lightweight Directory Access Protocol. Internet Engineering Task Force RFC 2251, 1997.

http://www.ietf.org/rfc/rfc2251.txt

[9] Sun Microsystems, Inc. NFS: Network File System Protocol Specification. Internet Engineering Task Force RFC 1094. 1989.

http://www.faqs.org/rfcs/rfc1094.html

[10] SAMBA

http://us3.samba.org/samba/docs/

[11] I. Foster and C. Kesselman, editors. The Grid 2: Blueprint for a New Computing Infrastructure. Morgan Kaufmann, 2003.

[12] M. Vernon. Save money by taking control of your desktops. TechRepublic, August 23, 2005.

http://insight.zdnet.co.uk/business/0,39020481,39214436,00.htm

[13] X Windows.

http://www.x.org

[14] Citrix Access Suite

http://www.citrix.com/English/ps2/products/documents.asp?contentid=12752

[15] Citrix GoToMyPC

http://www.citrix.com/English/ps2/products/documents.asp?contentid=13994

[16] Microsoft Remote Desktop Protocol

http://msdn.microsoft.com/library/?url=/library/en-us/termserv/termserv/remote_desktop_protocol.asp

[17] Virtual Network Computing

http://www.realvnc.com

[18] R. Baratto, J. Nieh and L. Kim. THINC: A Remote Display Architecture for Thin-Client Computing. Technical Report CUCS-027-04, Department of Computer Science, Columbia University, July 2004.

http://www.ncl.cs.columbia.edu/publications/ucs-027-04.pdf

[19] Sun Ray Thin Client

http://www.sun.com/sunray/whitepapers.html

[20] B. K. Schmidt, M. L. Lam, and J. D. Northcutt. The Interactive Performance of Slim: A Stateless Thin Client Architecture. Operating Systems Review, 34(5): 33-47, 1999.

http://research.sun.com/features/tenyears/volcd/papers/nrthcutt.htm

[21] Firefox and Thunderbird

http://www.mozilla.org

[22] OpenOffice

http://www.openoffice.org

[23] IntelliJ IDEA

http://www.intellij.com

[24] A. M. Lai, J. Nieh, B. Bohra, V. Nandikonda, A. P. Surana, and S. Varshneya. Mobility: Improving Web Browsing Performance on Wireless PDAs Using Thin-Client Computing. Proceedings of the 13th ACM International Conference on World Wide Web, 2004.

http://citeseer.ist.psu.edu/lai04improving.html

[25] S. J. Yang, J. Nieh, S. Krishnappa, A. Mohla, and M. Sajjadpour. Mobility and Wireless Access: Web Browsing Performance of Wireless Thin-Client Computing. Proceedings of the 12th International Conference on World Wide Web, 2003.

http://citeseer.ist.psu.edu/sj03web.html

[26] A. Lai, J. Nieh. Limits of Wide-Area Thin-Client Computing. Proceedings of the 2002 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems, 2002.

http://citeseer.ist.psu.edu/lai02limits.html

[27] P. Sayer. Notebooks to Get Foldable Displays. PC World, September 6, 2000.

http://www.pcworld.com/news/article/0,aid,18349,00.asp

[28] L. Valigra. Next Digital Screen Could Fold Like Paper. Christian Science Monitor, January 8, 2004.

http://www.csmonitor.com/2004/0108/p14s01-stct.html

About the author

Frank Sommers is an editor with Artima Developer. He is also founder and president of Autospaces, Inc., a company providing collaboration and workflow tools in the financial services industry.
